R Losses Regression

Source: R/ml_regression_linear_regression.R

Ridge regression is an extension of linear regression in which the loss function is modified to penalize model complexity. This is done by adding a penalty term equal to a tuning parameter alpha times the sum of the squared coefficients:

$$\text{Loss} = \sum_{i=1}^{n} (y_i - \hat{y}_i)^2 + \alpha \sum_{j=1}^{p} \beta_j^2$$

Logistic regression: whereas linear regression predicts a continuous Y, logistic regression is used for binary classification. If linear regression is used to model a dichotomous Y, the fitted model does not restrict the predicted values to the interval [0, 1].

The Huber loss is a loss function used in robust regression. It is less sensitive to outliers than RMSE because it is quadratic for small residuals and linear for large residuals.
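The three ideas above (the ridge loss, the Huber loss and the logistic link) can be sketched in base R as follows. This is a minimal illustration, not the implementation used by sparklyr or Spark: the function names `ridge_loss`, `huber_loss` and `sigmoid` are hypothetical, and the Huber threshold `delta = 1` is an assumed default.

```r
# Ridge loss: residual sum of squares (the OLS term) plus alpha times the
# sum of squared coefficients. For simplicity every entry of beta is
# penalized here; in practice the intercept is usually left unpenalized.
ridge_loss <- function(beta, X, y, alpha) {
  residuals <- y - X %*% beta
  sum(residuals^2) + alpha * sum(beta^2)
}

# Mean Huber loss with threshold delta (hypothetical default of 1):
# 0.5 * r^2 when |r| <= delta, delta * (|r| - 0.5 * delta) otherwise,
# so large residuals (outliers) grow linearly rather than quadratically.
huber_loss <- function(truth, estimate, delta = 1) {
  r <- truth - estimate
  per_obs <- ifelse(abs(r) <= delta,
                    0.5 * r^2,
                    delta * (abs(r) - 0.5 * delta))
  mean(per_obs)
}

# Logistic (sigmoid) function: maps any real-valued linear predictor into
# (0, 1), which is why logistic regression suits a binary response.
sigmoid <- function(z) 1 / (1 + exp(-z))

# Usage
X <- matrix(c(1, 1, 0, 1), nrow = 2)   # columns: intercept, one predictor
ridge_loss(beta = c(1, 2), X = X, y = c(1, 3), alpha = 0.5)  # 2.5
huber_loss(truth = c(0, 0), estimate = c(0.5, 3))             # 1.3125
sigmoid(c(-10, 0, 10))
```

In the Huber example the small residual (0.5) contributes 0.125 and the large one (3) contributes only 2.5, whereas squared error would charge it 4.5 (or 9 without the one-half factor).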

Perform regression using linear regression.

Arguments

| x | A |

|---|---|

| formula | Used when |

| fit_intercept | Boolean; should the model be fit with an intercept term? |

| elastic_net_param | ElasticNet mixing parameter, in range [0, 1]. For alpha = 0, the penalty is an L2 penalty. For alpha = 1, it is an L1 penalty. |

| reg_param | Regularization parameter (aka lambda) |

| max_iter | The maximum number of iterations to use. |

| weight_col | The name of the column to use as weights for the model fit. |

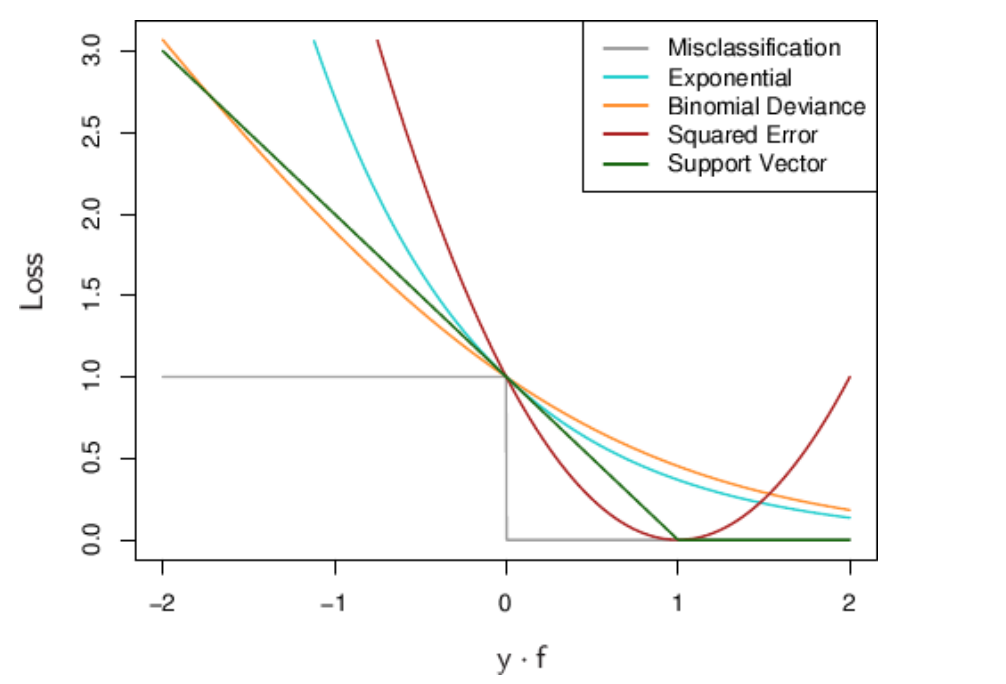

| loss | The loss function to be optimized. Supported options: 'squaredError'and 'huber'. Default: 'squaredError' |

| solver | Solver algorithm for optimization. |

| standardization | Whether to standardize the training features before fitting the model. |

| tol | Param for the convergence tolerance for iterative algorithms. |

| features_col | Features column name, as a length-one character vector. The column should be single vector column of numeric values. Usually this column is output by |

| label_col | Label column name. The column should be a numeric column. Usually this column is output by |

| prediction_col | Prediction column name. |

| uid | A character string used to uniquely identify the ML estimator. |

| ... | Optional arguments; see Details. |
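To make the interaction of `reg_param` (lambda) and `elastic_net_param` (alpha) concrete, the sketch below computes the elastic-net penalty for a coefficient vector `w`. It assumes the Spark convention lambda * (alpha * ||w||_1 + (1 - alpha) / 2 * ||w||_2^2) with the intercept excluded from `w`; `elastic_net_penalty` is a hypothetical helper, not part of sparklyr.

```r
# Elastic-net penalty, assuming the Spark parameterization:
#   reg_param * (elastic_net_param * ||w||_1
#                + (1 - elastic_net_param) / 2 * ||w||_2^2)
# elastic_net_param = 0 gives a pure L2 (ridge) penalty,
# elastic_net_param = 1 gives a pure L1 (lasso) penalty.
elastic_net_penalty <- function(w, reg_param, elastic_net_param) {
  stopifnot(elastic_net_param >= 0, elastic_net_param <= 1)
  l1 <- sum(abs(w))   # L1 norm of the coefficients
  l2_sq <- sum(w^2)   # squared L2 norm of the coefficients
  reg_param * (elastic_net_param * l1 + (1 - elastic_net_param) / 2 * l2_sq)
}

w <- c(1, -2)
elastic_net_penalty(w, reg_param = 0.1, elastic_net_param = 0)    # pure L2: 0.25
elastic_net_penalty(w, reg_param = 0.1, elastic_net_param = 1)    # pure L1: 0.3
elastic_net_penalty(w, reg_param = 0.1, elastic_net_param = 0.5)  # mix: 0.275
```

With `elastic_net_param = 0` and `reg_param > 0`, this reduces to a ridge-style penalty of the kind described at the top of this page (up to the factor of one half).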

Value

The object returned depends on the class of x.

- spark_connection: When x is a spark_connection, the function returns an instance of an ml_estimator object. The object contains a pointer to a Spark Predictor object and can be used to compose Pipeline objects.
- ml_pipeline: When x is an ml_pipeline, the function returns an ml_pipeline with the predictor appended to the pipeline.
- tbl_spark: When x is a tbl_spark, a predictor is constructed and then immediately fit with the input tbl_spark, returning a prediction model.
- tbl_spark, with formula specified: When formula is specified, the input tbl_spark is first transformed using an RFormula transformer before being fit by the predictor. The object returned in this case is an ml_model, which is a wrapper of an ml_pipeline_model.

Details

When x is a tbl_spark and formula (alternatively, response and features) is specified, the function returns an ml_model object wrapping an ml_pipeline_model, which contains data pre-processing transformers, the ML predictor, and, for classification models, a post-processing transformer that converts predictions into class labels. For classification, an optional argument predicted_label_col (defaults to 'predicted_label') can be used to specify the name of the predicted label column. In addition to the fitted ml_pipeline_model, ml_model objects also contain an ml_pipeline object in which the ML predictor stage is an estimator ready to be fit against data. This is used by ml_save with type = 'pipeline' to facilitate model refresh workflows.

See also


See http://spark.apache.org/docs/latest/ml-classification-regression.html for more information on the set of supervised learning algorithms.

Other ml algorithms: ml_aft_survival_regression(), ml_decision_tree_classifier(), ml_gbt_classifier(), ml_generalized_linear_regression(), ml_isotonic_regression(), ml_linear_svc(), ml_logistic_regression(), ml_multilayer_perceptron_classifier(), ml_naive_bayes(), ml_one_vs_rest(), ml_random_forest_classifier()


loess {stats} (R Documentation)

Local Polynomial Regression Fitting

Description

Fit a polynomial surface determined by one or more numerical predictors, using local fitting.

Usage

Arguments

| Argument | Description |
|---|---|
| formula | a formula specifying the numeric response and one to four numeric predictors (best specified via an interaction, but can also be specified additively). Will be coerced to a formula if necessary. |
| data | an optional data frame, list or environment (or object coercible by |
| weights | optional weights for each case. |
| subset | an optional specification of a subset of the data to be used. |
| na.action | the action to be taken with missing values in the response or predictors. The default is given by |
| model | should the model frame be returned? |
| span | the parameter α which controls the degree of smoothing. |
| enp.target | an alternative way to specify |
| degree | the degree of the polynomials to be used, normally 1 or 2. (Degree 0 is also allowed, but see the ‘Note’.) |
| parametric | should any terms be fitted globally rather than locally? Terms can be specified by name, number or as a logical vector of the same length as the number of predictors. |
| drop.square | for fits with more than one predictor and |
| normalize | should the predictors be normalized to a common scale if there is more than one? The normalization used is to set the 10% trimmed standard deviation to one. Set to false for spatial coordinate predictors and others known to be on a common scale. |
| family | if |
| method | fit the model or just extract the model frame. Can be abbreviated. |
| control | control parameters: see |
| ... | control parameters can also be supplied directly (if |

Details

Fitting is done locally. That is, for the fit at point x, the fit is made using points in a neighbourhood of x, weighted by their distance from x (with differences in ‘parametric’ variables being ignored when computing the distance). The size of the neighbourhood is controlled by α (set by span or enp.target). For α < 1, the neighbourhood includes proportion α of the points, and these have tricubic weighting (proportional to (1 - (dist/maxdist)^3)^3). For α > 1, all points are used, with the ‘maximum distance’ assumed to be α^(1/p) times the actual maximum distance for p explanatory variables.
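The local fitting step described above can be sketched for a single predictor and α < 1 as follows. This is a simplified illustration, not the cloess code: the neighbourhood size floor(span * n) and the helpers `tricube_weights` and `local_linear_fit` are assumptions, normalization is ignored, and `loess` itself by default interpolates from a kd tree, so it will not reproduce these numbers exactly.

```r
# Tricube weights for a local fit at x0 (one predictor, 0 < span < 1).
# Assumption: the neighbourhood is the floor(span * n) points nearest x0,
# and maxdist is the distance to the farthest of those points.
tricube_weights <- function(x, x0, span) {
  stopifnot(span > 0, span < 1)
  q <- floor(span * length(x))
  stopifnot(q >= 1)
  d <- abs(x - x0)
  maxdist <- sort(d)[q]
  stopifnot(maxdist > 0)
  # Weight (1 - (d / maxdist)^3)^3 inside the neighbourhood, 0 outside.
  ifelse(d <= maxdist, (1 - (d / maxdist)^3)^3, 0)
}

# Local linear (degree = 1) estimate at x0 by weighted least squares.
local_linear_fit <- function(x, y, x0, span) {
  w <- tricube_weights(x, x0, span)
  fit <- lm(y ~ x, weights = w)
  unname(predict(fit, newdata = data.frame(x = x0)))
}

x <- 1:10
tricube_weights(x, x0 = 5, span = 0.5)
local_linear_fit(x, y = 2 * x + 1, x0 = 5, span = 0.5)  # 11
```

Repeating the local fit at every point of interest traces out the smooth curve; because the test data lie exactly on a line, the local linear estimate recovers it.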


For the default family, fitting is by (weighted) least squares. For family = 'symmetric', a few iterations of an M-estimation procedure with Tukey's biweight are used. Be aware that as the initial value is the least-squares fit, this need not be a very resistant fit.

It can be important to tune the control list to achieve acceptable speed. See loess.control for details.
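As an illustration of the control list, the sketch below contrasts the default `surface = "interpolate"` (kd-tree interpolation, faster on large data but no extrapolation) with `surface = "direct"` (exact local fits at every point). It uses the built-in `cars` data; the choice of speed 30 is an arbitrary point outside the observed range.

```r
# Default: surface = "interpolate" builds a kd tree and interpolates,
# which is faster for large data sets but does not extrapolate.
fit_interp <- loess(dist ~ speed, data = cars)

# Exact local fits at every evaluation point: slower, but prediction
# outside the range of the training data is possible.
fit_direct <- loess(dist ~ speed, data = cars,
                    control = loess.control(surface = "direct"))

outside <- data.frame(speed = 30)   # cars$speed ranges from 4 to 25
predict(fit_interp, newdata = outside)  # NA
predict(fit_direct, newdata = outside)  # a finite, extrapolated value
```

Extrapolated loess values should be treated with caution; the point here is only the behavioural difference between the two surface settings.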

Value

An object of class 'loess'.
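A minimal usage sketch with the built-in `cars` data: it fits a local quadratic surface with the default span and degree (written out explicitly), then predicts at new speeds inside the observed range, since the default interpolated surface returns NA outside it.

```r
# Local quadratic fit (degree = 2) with span = 0.75, the documented defaults.
fit <- loess(dist ~ speed, data = cars, span = 0.75, degree = 2)
class(fit)   # "loess"

# Predictions at new speeds inside the observed range (4 to 25).
pred <- predict(fit, newdata = data.frame(speed = c(5, 10, 15, 20)))

# Fitted values at the original data points, one per row of cars.
head(fitted(fit))
```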

Note

As this is based on cloess, it is similar to but not identical to the loess function of S. In particular, conditioning is not implemented.

The memory usage of this implementation of loess is roughly quadratic in the number of points, with 1000 points taking about 10Mb.

degree = 0, local constant fitting, is allowed in this implementation but not documented in the reference. It seems very little tested, so use with caution.

Author(s)


B. D. Ripley, based on the cloess package of Cleveland, Grosse and Shyu.

Source

The 1998 version of the cloess package of Cleveland, Grosse and Shyu. A later version is available as dloess at https://www.netlib.org/a/.

References

W. S. Cleveland, E. Grosse and W. M. Shyu (1992) Local regression models. Chapter 8 of Statistical Models in S, eds J. M. Chambers and T. J. Hastie, Wadsworth & Brooks/Cole.

See Also

loess.control, predict.loess.

lowess, the ancestor of loess (with different defaults!).